Bidelman Auditory Cognitive Neuroscience Lab

Projects

Neural mechanisms and plasticity underlying auditory categorization and learning

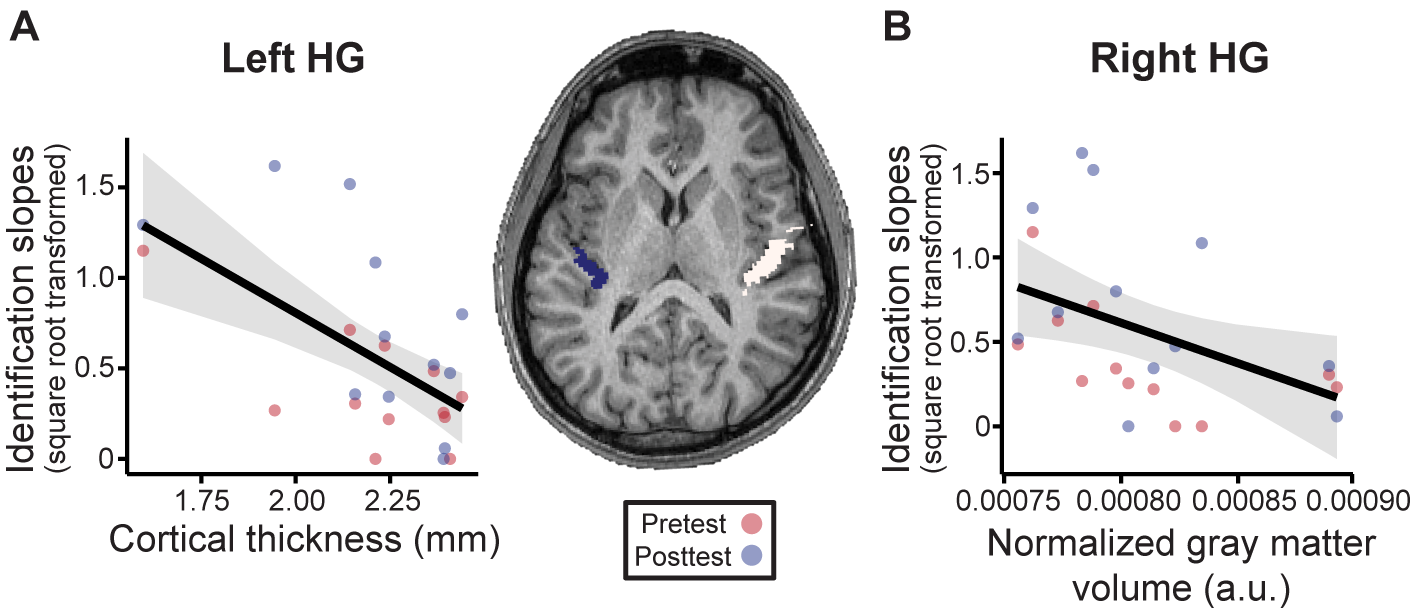

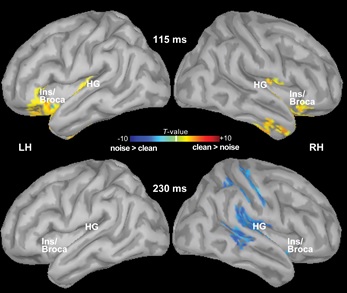

Using novel multivariate classification techniques to "decode" electrical brain responses (EEG), we are examining how the human brain groups sounds and learns to categorize auditory events, a process known as categorical perception (CP). We have shown that neural correlates of the phonetic properties of speech emerge as early as primary auditory cortex (PAC), ~150 ms after sound onset, in both evoked and induced oscillatory modes of brain activity. This is much earlier and lower in the auditory system than previously thought. Under some circumstances (e.g., in highly experienced listeners), speech is already categorically organized at the neural level in PAC. We have also shown that categories are dynamically shaped by experience, goal-directed attention, and stimulus context (e.g., familiarity).

Auditory cognitive aging and hearing across the lifespan

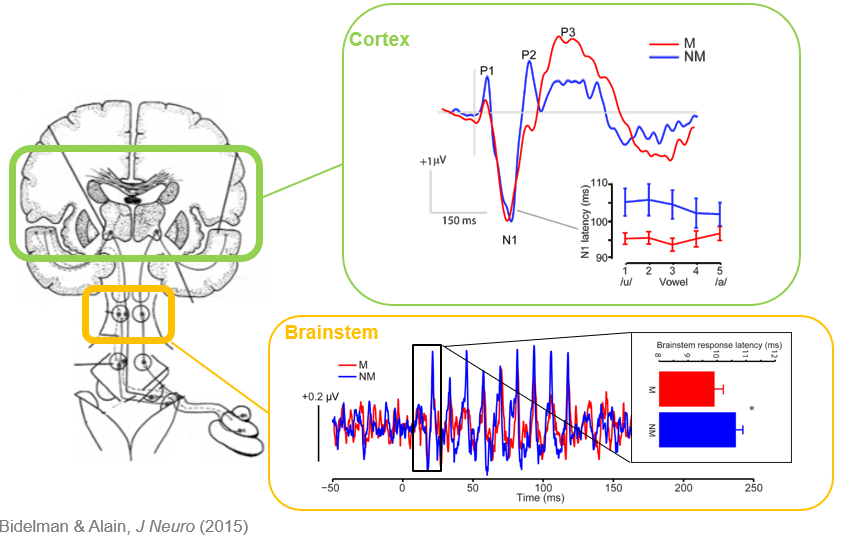

A translational avenue of our research investigates cognitive aging and changes in speech-language function that occur across the lifespan. Our aging studies have revealed that normal aging, mild cognitive impairment, and age-related hearing loss impair the functional coupling between brainstem and cortex during speech processing, resulting in more redundancy in the brain's representations of speech across different levels of the neuraxis. This body of work emphasizes that declines in listening skills later in life depend not only on the quality of signal representations within individual brain areas, but also on how information is transmitted between regions of the speech network (i.e., functional connectivity). Promisingly, our plasticity studies suggest that auditory experiences like musical training might help counteract age-related declines in speech processing. Our findings underscore that robust neuroplasticity is not restricted by age and may serve to strengthen speech listening skills that decline across the lifespan.

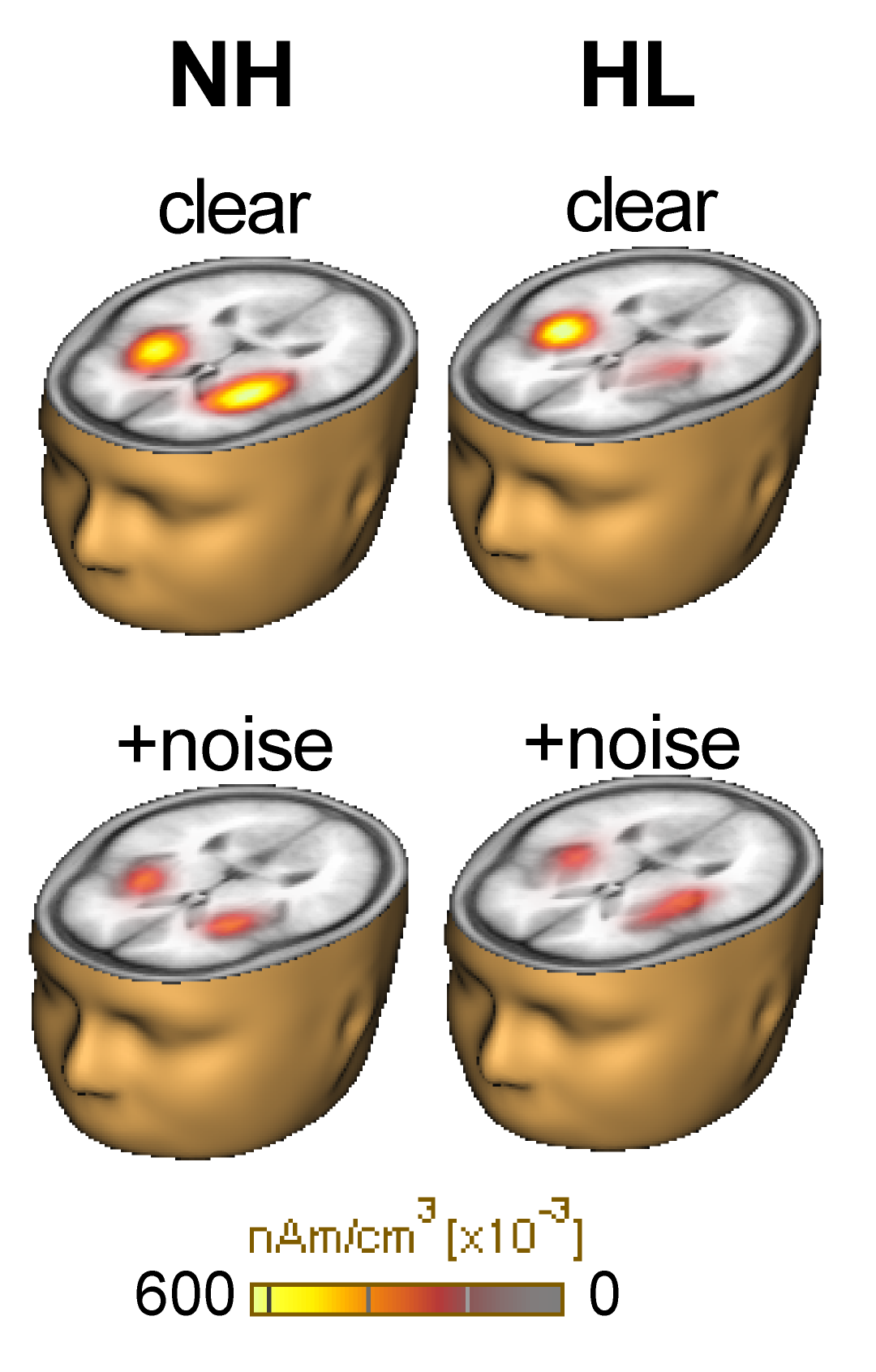

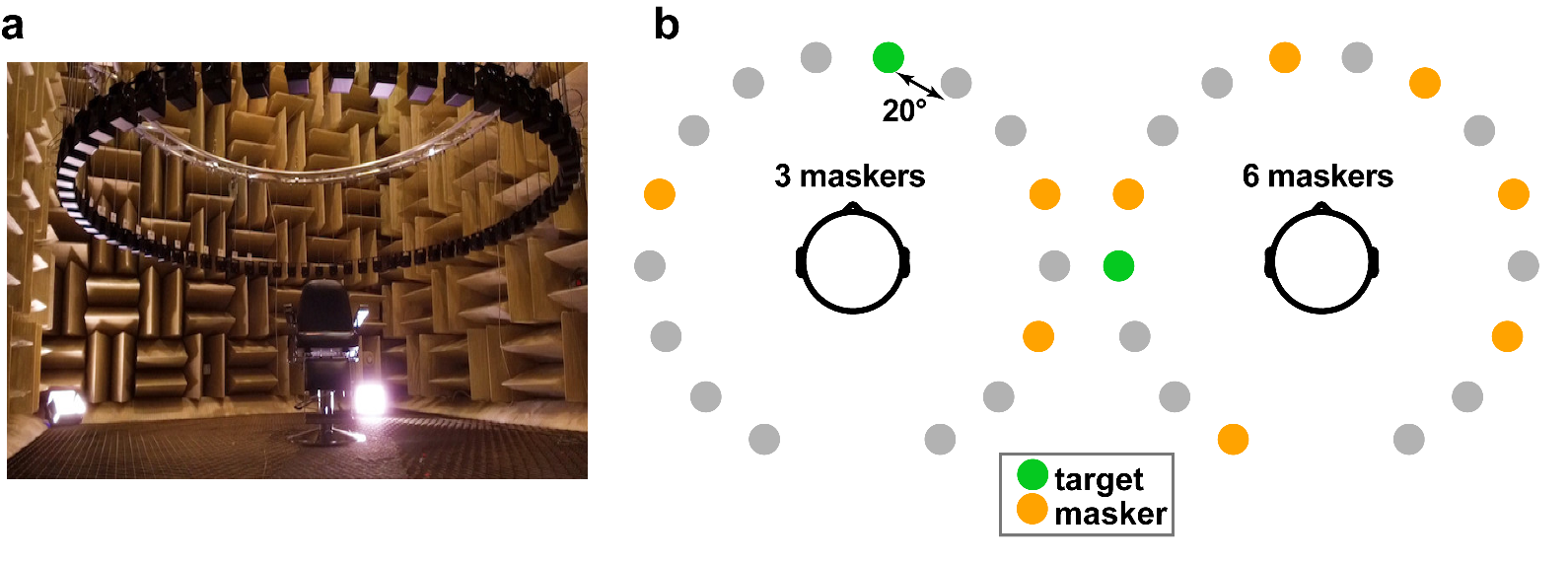

Neural mechanisms of auditory scene analysis

We are investigating the subcortical and cortical mechanisms that guide auditory scene analysis and cocktail party listening. Of particular interest to the lab is characterizing the neural basis of individual differences in speech-in-noise (SIN) perception and how SIN listening skills are affected by disorders/impairments (e.g., hearing loss) and lifelong experiences (e.g., bilingualism, musical training).

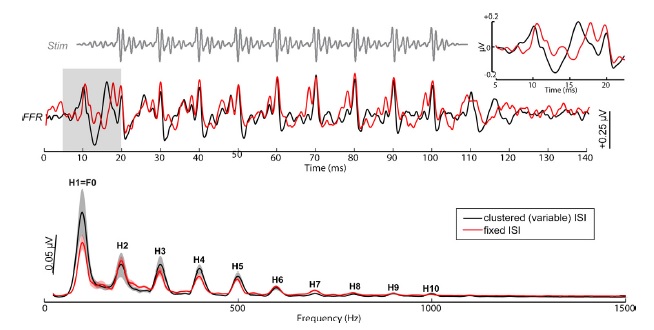

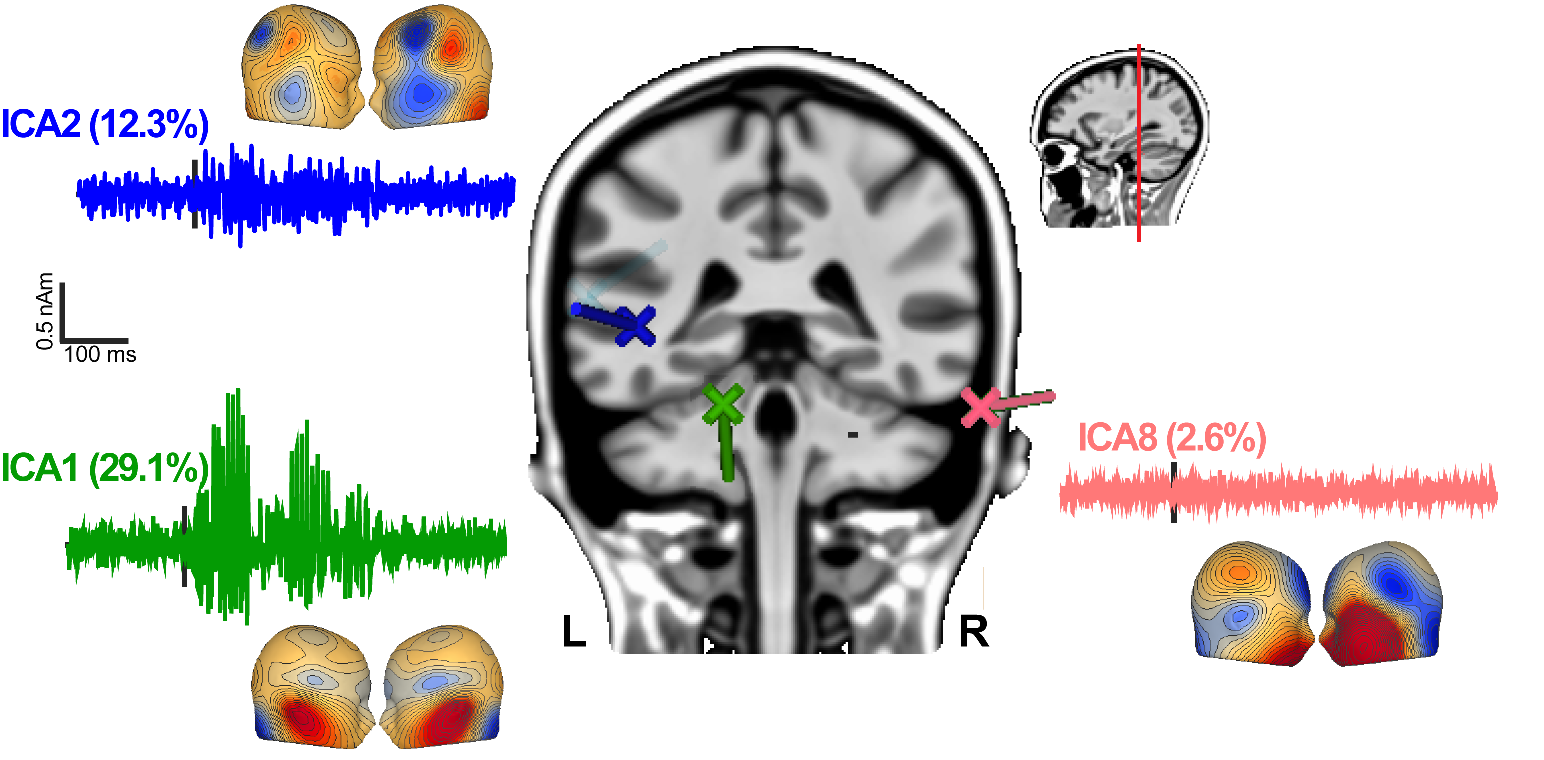

Frequency-following responses (FFRs) and their functional characterizations

We specialize in recording the FFR, a sustained phase-locked potential that offers a "neural fingerprint" of sound encoding within the EEG. This neurophonic offers a high-fidelity representation of the speech signal. In fact, listeners can identify speech from these neural responses when they are replayed as auditory stimuli. FFRs are becoming a mainstream research tool for understanding the neural encoding of speech, music, and experience-dependent plasticity in auditory processing. Yet despite their potential clinical and empirical utility, FFRs are not yet widely used. In a series of studies, we are providing comprehensive characterizations of the FFR, including its source generators and its distinctions from the conventional auditory brainstem response used clinically. The lab has also developed new objective statistics for detecting FFRs, as well as optimal stimulus paradigms for eliciting the responses when they are recorded simultaneously with cortical potentials. The goal of this work is to make this auditory evoked potential more accessible to researchers and clinicians and to provide a better understanding of its physiological basis, response characteristics, and relation to other common electrophysiological measures.

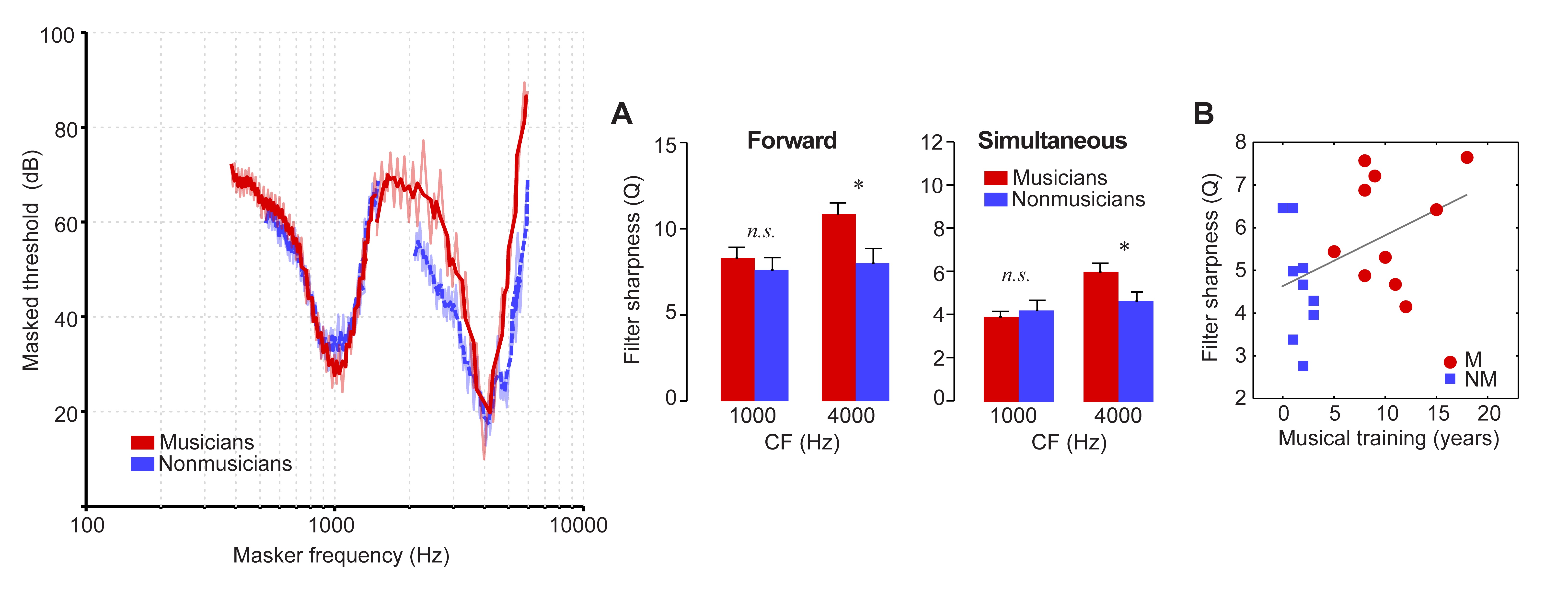

Auditory neuroplasticity

We are investigating how experience and certain forms of training change the brain. Musicians are an exceptional model for studying auditory plasticity given their intense, long-term experience manipulating complex sound information. Our neuroimaging studies demonstrate experience-dependent tuning of the human auditory system with music engagement. Remarkably, musicians' enhancements in brain function are not restricted to music processing; our studies also reveal important benefits to speech and language functions as well as general, non-auditory cognitive abilities (e.g., aspects of memory). We are exploring how music instruction and other forms of experience (e.g., bilingualism) could be used to strengthen speech/language skills and general cognitive abilities across the lifespan.

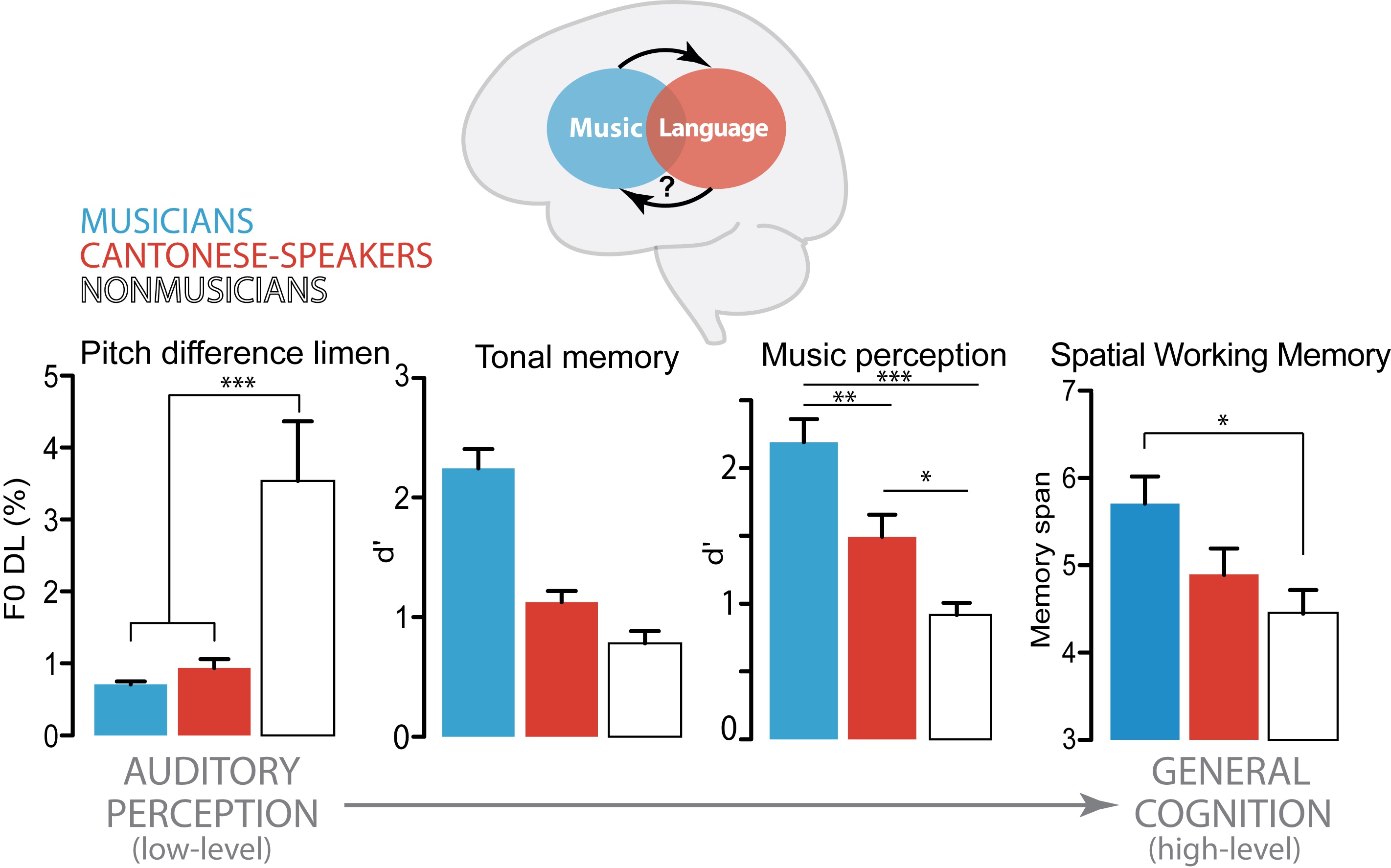

Cross-domain transfer effects between music and language experience

Recent neuroimaging studies suggest that some aspects of music and language are processed by shared brain regions. This overlap raises the intriguing possibility that experience in one domain (e.g., music training) might transfer to benefit processing in the other domain (e.g., speech). Neurocognitive models suggest the reverse might also be true, i.e., that intense language experience improves music listening skills. In both event-related potential (ERP) and behavioral studies, we are investigating how music and language experience (particularly with tone languages) improve the neural processing of music and language signals. Our findings suggest that, under some circumstances, transfer between the two domains can be bidirectional (music to language, M->L, and language to music, L->M).